Laws of Physics And the Word of God

by Daniel Gregg

(under construction.

This is a test facility. Since I have nothing to hide, and often improve

my ideas, you are welcome to look, but there are problems and gaps I

know about that you don't.)

Axiom 1: The universe was originally designed and engineered from the beginning.

Axiom 2: The Creator tended to incorporate pleasing aesthetics and symmetry into

his designs.

Axiom 3: The 'word' of the Creator is his program for implementing the laws of

physics.

These axioms have some fairly stunning implications for science and the search

for an understanding of the universe. The first axiom implies that we may

expect a certain complexity in the design that defies explanation in terms of

blind forces or random chances. Think of a computer programmer

designing a virtual reality program. The programmer will write code

to specify each player in the program and how it behaves. He will

incorporate a random function in the characters to generate apparently

unexpected variety. He will then write code to monitor the behavior

of his program, i.e. to gather information from it about its state.

This information is then used in the code governing the program as an input to

the laws of the program.
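To make the programmer analogy concrete, here is a minimal sketch in Python. Everything in it is hypothetical and chosen only for illustration: the `Automaton` class, the `monitor` function, and the drift rule are assumptions made for the sketch, not a model of any real physics. It shows the three ingredients named above: coded behavior for each player, a random function, and a monitor whose output is fed back in as an input to the rules.

```python
import random


class Automaton:
    """Hypothetical 'player': holds a state and follows a coded rule."""

    def __init__(self, position, rng):
        self.position = position
        self.rng = rng

    def step(self, observed_mean):
        # Conditional code: drift toward the monitored mean (illustrative rule).
        if observed_mean > self.position:
            drift = 1
        elif observed_mean < self.position:
            drift = -1
        else:
            drift = 0
        # Random function: generates apparently unexpected variety.
        kick = self.rng.choice([-1, 0, 1])
        self.position += drift + kick


def monitor(players):
    """Gather information about the state of the program (here, the mean position)."""
    return sum(p.position for p in players) / len(players)


def run(steps=50, seed=0):
    rng = random.Random(seed)
    players = [Automaton(pos, rng) for pos in (-20, 0, 30)]
    history = []
    for _ in range(steps):
        mean = monitor(players)   # gather information about the state
        history.append(mean)
        for p in players:         # feed it back as an input to the rules
            p.step(mean)
    return players, history
```

Calling `run()` returns the final players and the monitored history. With a fixed seed the "random" variety is fully reproducible, which is the sense in which the programmer remains in control of it.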

These axioms should teach us a lesson. At the fundamental level what

is called 'nature' is not a lump of matter or a blind set of forces.

Rather the fundamental building blocks of reality are units of information with

processing capacity. In the computer world, the simplest coding

programs are called 'finite state automata' or 'Turing machines'.

Consider each fundamental particle of nature (a proton, neutron, boson, or

quark) as a miniature machine that gathers information about its environment and

state, and then computes and executes a response based on the word (i.e. coding)

of the Creator. The Creator has coded conditional code into his

creations, which includes the capacity to gather information to decide which

part of the code to execute. Of necessity, the needed

information is queried and processed at superluminal speed or even timeless

speed. There is a substructure of building blocks for God's

infinitesimal automata. However, their properties are not defined by

the substructure, but by the information designed into the system.

We may assume that the substructure is only necessary because these are mindless

(i.e. soulless) automata, which defines them as physical vs. spiritual.

We are not concerned with the spiritual at this point, but a spirit consists of

pure intelligence or spirit without the need for physical substructure.

This is the other side of reality, which is dualistic.

The soulless automata appear to us in the macro world as 'forces', 'waves', or

'particles'. Often we only perceive a very small part of the

informational content they contain. Only a very small part of

the program they follow is visible to us. For aesthetic reasons much of

the informational machinery has been hidden. In any case, we ascribe

intelligence to creation by virtue of its automatic programming, and ability to

automatically deal with conditions and automatically gather information.

We do not thereby become mystics. These bits of programming are not

self-actuated, but were made by the Creator.

When faced with a phenomenon that defies immediate explanation, we may conclude

that our lack of explanation is merely because of a lack of knowledge about how

the Creator programmed the phenomenon to behave. But if we try to

explain the phenomenon of nature with a philosophy of naturalism then we may end

up stuck and at a dead end for an explanation. Naturalism always

seeks to explain the behavior of nature by minimizing the information needed for

its operation. This is akin to the ancient idolatry whose

essence is to ascribe what appears to be more complex to simpler causes.

The old idolatry was to ascribe life to wood, stone, silver and gold.

At least this is what the naturalistic mind does, and then when confronted with

it, often denies it. The modern counterpart is just the same.

Modern man is always trying to explain the ultimate physics in a way that

completely omits informational input into the laws of physics, since clearly

such informational input implies a programmer and a designer.

For example, quantum mechanics defies the natural mind's attempt to explain it

without a dynamic information gathering mechanism in the most fundamental

particles. The subject is largely so confusing because of attempts

to explain it without the principle of dynamic information processing.

Consider, for example, the quantum double slit experiment. A photon of light

approaches two slits (two thin slots very close together). This

photon of light is oscillating along its wave front appearing at all locations

with equal probability before it goes through the slits. The

photon's wave front goes through both slits, but the photon itself is forced to

go through one slit, depending on where it is on the wave front as the wave

front reaches the slits. When the wave front goes through the slits,

it splits into two parts much like a single water wave splits when faced with

gaps in a breakwater. The two halves of the wave front then collide,

creating regions of increased probability for the photon's location, and regions of

decreased probability, again much like water waves colliding create high waves

in some spots, low ones in others, and complete cancellation of waves as a

trough and crest cancel each other out.

Think of a surfer riding his wave. He surfs up and down his wave remaining

in each location with equal time allotted for each part of the wave. Then

his wave goes through a breakwater with two gaps. He has to choose

to go through one side or the other. He chooses. But his wave

changes. No longer is it a single smooth wave that he can ride all parts

of with equal times. Now it is chopped up because after going

through the breakwater, it is interfering with itself, canceling in some spots

and reinforcing in others. The surfer modifies his action.

He surfs only the reinforced spots with equal time, spending little or no time

in the cancelled spots, quickly paddling over them since they are no fun.

Eventually, he reaches the shore (the detection screen) landing at a spot equal

to the probability of a reinforced part of the wave hitting the shore. In

the physics lab, this looks like a pattern of light and dark lines, called an

interference pattern.

So the photon particle after passing one of the slits must now ride the changed

wave. No longer does it oscillate back and forth on it with equal

probability at all locations, but it spends more time at the reinforced parts

and little or no time at the cancelled parts. When the wave hits the

detection screen, the photon appears most probably in one of the locations where

the wave reinforced. This is known because the hits of many

photons are studied.
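The way a pattern builds up from many single hits can be sketched in Python. This is a toy model under stated assumptions: ideal narrow slits, small angles, and the textbook two-slit probability proportional to cos²(π d x / (λ L)). The function names and the numbers used for slit separation, wavelength, and screen distance are illustrative and do not come from any particular experiment.

```python
import math
import random


def two_slit_probability(x, slit_separation, wavelength, screen_distance):
    """Relative landing probability at screen position x (ideal slits, small angles)."""
    phase = math.pi * slit_separation * x / (wavelength * screen_distance)
    return math.cos(phase) ** 2


def sample_hits(n, half_width, slit_separation, wavelength, screen_distance, seed=0):
    """Draw n landing positions one photon at a time by rejection sampling."""
    rng = random.Random(seed)
    hits = []
    while len(hits) < n:
        x = rng.uniform(-half_width, half_width)
        # Keep the candidate with probability equal to the wave's reinforcement there.
        if rng.random() < two_slit_probability(x, slit_separation, wavelength, screen_distance):
            hits.append(x)
    return hits


# Illustrative values: 0.1 mm slit separation, 500 nm light, screen 1 m away.
D, LAMBDA, L_SCREEN = 1e-4, 500e-9, 1.0
hits = sample_hits(2000, 5e-3, D, LAMBDA, L_SCREEN)
near_bright = sum(1 for x in hits if abs(x) < 0.5e-3)          # around the central maximum
near_dark = sum(1 for x in hits if abs(x - 2.5e-3) < 0.5e-3)   # around the first minimum
```

Each photon lands at one spot only, yet `near_bright` comes out far larger than `near_dark`. No single hit shows the pattern; the accumulated hits do.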

Now, how does the photon know which parts of its wave to appear in?

Since it goes through only one slit, how does it know the best locations to

'surf', which are only known from the physical configuration of the other slit?

Somehow the wave part of the photon tells the particle part where it ought

to go. Mind you, the wave does not tell the photon where it must go.

It merely gives it the equivalent of a pair of dice that are likely to come up

with the reinforced locations. Either the photon is collecting information

superluminally, or information is being sent to it by which it modifies its

location in space! It is really hard to chalk this behavior up to a

set of blindly kinetic forces.

But now it gets even weirder. Someone puts a couple of photon

particle detectors just after the slit to try to figure out which one the photon

went through. An indirect "detection method" is used so as to try to

disturb the particle and wave as little as possible, as most detection methods

stop the photon cold and erase the wave. A vertical polarizer

is placed after one slit, and a horizontal one after the other, such that

the wave part has already interfered with itself at the placement point, but the

particle has not had space to randomize away from the slit it went through.

What the polarizers do is force the wave to "rotate" so that one half is rotated

0 degrees and the other at 90 degrees. What you might think is that

the interference probability code gets rotated one way on one part of the screen

and the other way on the other part of the screen. Not so.

Even though the probability code was created before going through the

polarizers, after going through them, the pattern is nowhere to be seen on the

screen, not even in two rotated halves. Successive photons

land on the screen in two spots coding no interference patterns at either spot

as they accumulate. One can tell which slit each particle goes

through, but the particle no longer is surfing the interference pattern.

It is as if the half waves have become a straight plane wave again, and it surfs

with equal time at all parts, even though we expect two half interference waves.

Here is what happens: The photon does not have time to randomize its position

between the slits and the polarizer. It hits the polarizer, and records

the fact that it is 'marked' because it is now reoriented. Being marked,

it does not compute its permitted oscillating locations according to the post

slit interference, but it goes back to the last wave where it was randomized and

not 'read' just before it went through the slit. However, it retains

the fact that its wave is only half as wide now. Somehow, the particle

saved this information. Somehow, it gathers info that it is tagged by the

polarizer, and modifies its behavior!

The situation now gets even weirder. Our experimentalist puts

a 45 degree polarizer in the path of the half waves coming out of both sides of

the slit at some distance after the 0 and 90 degree polarizers.

Guess what? Our successive particle shots start hitting the screen

again probabilistically building up a pattern equal to an interference.

The 0 degree half wave with photon arrives at the 45 polarizer, going straight,

spending equal times at each part of the wave. It gets turned 45

degrees. Meanwhile, it apparently did not forget its other half.

It apparently acquired the vector of its 90 degree half when it was split up at

the dual polarizers. It programs this 'theoretical wave'

through the same 45 polarizer and finds that it will mesh with the half it

already has. It recombines them forming a new interference pattern

based on a projection of the interference pattern just before the split up at

the polarizers. It also knows somehow that its previous tagging at the 0

and 90 degree polarizers is now useless information for locating it as it

randomizes again while meshing with its other half. Thus it locates itself

according to the interference probabilities. However, it does

not perfectly put the patterns back together, as can be seen if the 45 polarizer

is turned 90 degrees to polarize 45 degrees the other way. The

interference patterns are inverse of each other. So we see that the

photon has a spatial memory that holds a vast amount of information concerning

all its states and known potential states. It computes the

probability of where it is supposed to be and modifies it according to its

interactions with other material particles.

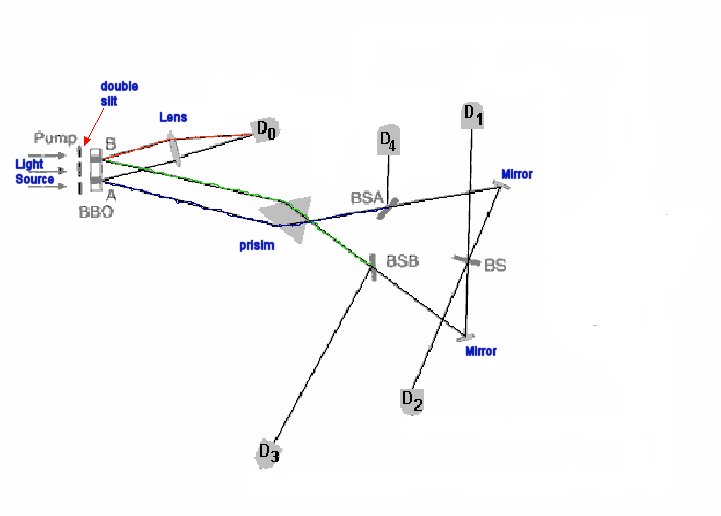

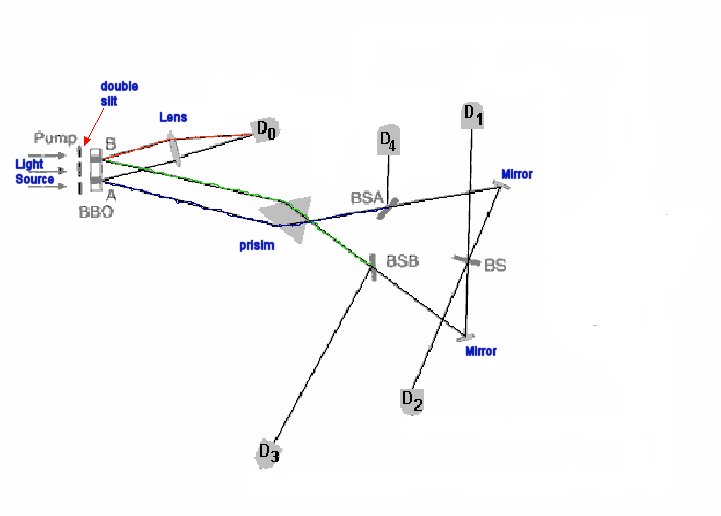
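The bookkeeping of the polarizer experiment can be sketched with ordinary two-component polarization vectors. This is a minimal sketch, assuming the standard rule that the strength of the interference term is set by the overlap of the two polarizations. The function names are hypothetical, and `phase` stands for the path difference between the two half waves at a given spot on the screen.

```python
import math

H = (1.0, 0.0)   # half wave from the slit behind the 0 degree polarizer
V = (0.0, 1.0)   # half wave from the slit behind the 90 degree polarizer


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]


def polarizer_axis(angle_deg):
    t = math.radians(angle_deg)
    return (math.cos(t), math.sin(t))


def intensity(phase, pol_a, pol_b, analyzer_deg=None):
    """Relative intensity where the two half waves meet with the given phase difference."""
    if analyzer_deg is None:
        # No final polarizer: the cross term scales with the overlap of the polarizations.
        return dot(pol_a, pol_a) + dot(pol_b, pol_b) + 2 * dot(pol_a, pol_b) * math.cos(phase)
    axis = polarizer_axis(analyzer_deg)
    a = dot(pol_a, axis)   # amplitude of each half wave passed by the final polarizer
    b = dot(pol_b, axis)
    return a * a + b * b + 2 * a * b * math.cos(phase)
```

With the 0 and 90 degree markers alone, `intensity(phase, H, V)` is the same for every phase, so no pattern appears. With the 45 degree polarizer added, `intensity(phase, H, V, 45)` varies as 1 + cos(phase). Turned the other way, `intensity(phase, H, V, -45)` varies as 1 - cos(phase), which is the inverse pattern described above.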

Now things get really crazy with the 'delayed quantum eraser' experiment.

This one is a bit difficult to understand, so I will use a bit of analogy to

describe it. A photon is shot at a double slit. The

photon must choose a slit. The wave part goes through both, and the

interference information is relayed to the photon. But before the

photon can randomize itself on the new probability pattern it is intercepted by

a crystal placed in front of each slit. The crystal destroys the

photon, but before doing so makes two copies of it. The two copies

are called 'entangled photons'. If it goes via slit A, then two

copies come out of the crystal on the A side. If it goes via slit B then

two copies come out of the B side. The nice property of 'entangled

photons' is that whatever information is preserved in one is preserved in the

other, and whatever is determined to be true of one upon examination will be

true of the other, save only that their locations are different. One

photon goes to detector D0.

This photon is detected and displays either an interference landing or a

non-interference landing on the detector before the other photon even reaches

the rest of the experiment. The D0 photon seems to know what

the second photon will do before the second photon does it. Whatever

pattern the D0 photon shows is a perfect prediction of what the second photon

actually does! We can only conclude that the D0 photon asks its

partner what it plans on doing for the rest of the experiment so that it can

arrange to land on the detector with the correct pattern. And it has

to send a request and receive the response at superluminal velocity!

The second photon complies with the request for future information and

determines what it will do in advance by probing the experimental maze with a

superluminal sensor (a theoretical wave front that executes its probability

function and makes all the choices it can). (Probably the only way to upset

the infallibleness of its plans is to rearrange the experimental maze faster

than the speed of light.) It finds a 50% mirror as its first

obstacle. If it plans to reflect to detector D4, it tells the D0

photon to show no interference pattern. But if it plans to go through the

50% mirror it proceeds to the next obstacle, another 50% beam splitter.

But this time it meets its other half wave at the second beam splitter (by its

own space-time computation). Remember that even though the ancestor

photon only went via one slit, that its wave went via both. This

info and the interference pattern was saved by the entangled photon.

It recombines, and randomizes its location by going to either detector D1 or D2.

Having planned this all out and determined which probability path it will take,

the second photon tells the D0 photon to show an interference pattern!

If it goes to D4 we know which slit it came by, but there is no interference

pattern at D0. If to D1 or D2 then we don't know which side the

photon went through. If it goes through the 50% mirror for the other

side and ends up at D3, we know which slit it went through, but then no

interference shows up at D0. If the plan is to go to D1 or D2, they

always show an interference pattern, and when they do D0 has already shown it

beforehand, predicting it infallibly. The photons seem to know if

even the most general information about their previous location is saved

somewhere else (obtained by disturbing it a little). They are

like sly little automata that seem to know exactly when they've been pinned

down, and then they perform no tricks for the observer.
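The sorting of D0 hits by the partner's detector can be written down as a small lookup. This is only a toy sketch, assuming the textbook description of this experiment in which the D1 and D2 subsets of D0 hits carry complementary fringes and the D3 and D4 subsets carry none. The function name and the `fringe_period` parameter are hypothetical, and the overall envelope of the pattern is ignored.

```python
import math


def d0_pattern(x, partner_detector, fringe_period=1.0):
    """Relative rate of D0 hits at position x, given where the partner photon ends up."""
    phase = 2 * math.pi * x / fringe_period
    if partner_detector == "D1":
        return 0.5 * (1 + math.cos(phase))   # fringes
    if partner_detector == "D2":
        return 0.5 * (1 - math.cos(phase))   # complementary fringes
    if partner_detector in ("D3", "D4"):
        return 0.5                           # which slit is known: no fringes
    raise ValueError(f"unknown detector: {partner_detector}")
```

In this sketch the D1 and D2 patterns add up to a constant at every `x`, so the fringes show only when the D0 hits are grouped according to what the partner photon did.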

Here is another version of the same experiment:

So how would we explain this experiment in terms of information programming?

Clearly the photon coming down the left path crashes into the detector first.

It always seems to know what will happen to the one on the right path that

crashes into the detector later, i.e. it knows whether it will interfere with

itself creating 'fringes' and does so itself after having determined the case.

The left photon asks its entangled partner on the right to 'determine' the

situation. The entangled partner on the right complies and probes its own

path superluminally, and then sends its predicted plans to the left photon so

that the left photon will know whether to show interference or not.

The only way to fool the left photon would be to move the lens or detector

superluminally after the left photon crashed, but before the right photon

reaches the lens.

We don't have to buy into any of the crap about going backward or forward in

time here, nor about retarded probability waves or advance probability waves.

One has to invoke time travel in order to save the speed of light as the speed

limit on information transfer. Of course, the result of this

experiment does not prove the present affects the past. It only proves

that what seems to be determined in the present was really already determined in

the past. The speed of light, of course, is not the limit on the

speed of information in the universe. We know this by divine

revelation. Prayer travels faster than the speed of light.

The speed of light is a constant if we consider the speed of electromagnetic

radiation a constant. That is correct, and is shown by experiment.

However, that does not disprove for us that non-electromagnetic physics are at

work between the electromagnetic physics. Only one predisposed to

naturalistic simplicity might assume that.

How the Earth Could Be at the Center

If the earth's center is positioned at vector (0,0,0),

which we will call the "point of

origin" for a conceptual Euclidean space frame of reference, then the

gravitational law can be engineered so that it keeps the earth always at the

center of this coordinate system. This requires more

complexity[3]

, to be sure. But the engineer is up to it. It fits the

aesthetic axiom because it would place the divine pinnacle of creation at the

center. And the very fact that God started with the earth and not

with the heavens leads to the probability that the earth was put at the center

and then the heavens were built around it. The engineering

involves making the cosmos go around the earth once a day, and making the sun

the center of a planetary system that revolves around the earth once a year.

Such a model is not new. It is called Tychonian after Tycho Brahe.

Watching a Tychonian system work is like watching a Spirograph.
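The purely geometric side of the Tychonian arrangement is easy to sketch in Python. The sketch assumes circular, coplanar orbits and leaves out the daily rotation of the cosmos; the function names are hypothetical. It places the earth at (0, 0), sends the sun around the earth once a year, and sends a planet around the sun, then compares the result with the same planet viewed from the earth in a sun-centered layout.

```python
import math


def circular_orbit(radius, period, t):
    """Position on a circle of the given radius after time t (same units as period)."""
    angle = 2 * math.pi * t / period
    return (radius * math.cos(angle), radius * math.sin(angle))


def tychonian_position(planet_radius_au, planet_period_yr, t_yr):
    """Planet position with the earth fixed at (0, 0)."""
    sx, sy = circular_orbit(1.0, 1.0, t_yr)                            # sun about the earth
    px, py = circular_orbit(planet_radius_au, planet_period_yr, t_yr)  # planet about the sun
    return (sx + px, sy + py)


def heliocentric_relative(planet_radius_au, planet_period_yr, t_yr):
    """The same planet as seen from the earth in a sun-centered layout."""
    ex, ey = circular_orbit(1.0, 1.0, t_yr + 0.5)   # earth half a year out of step with the geocentric sun
    px, py = circular_orbit(planet_radius_au, planet_period_yr, t_yr)
    return (px - ex, py - ey)
```

For a Mars-like orbit (radius 1.524, period 1.881 years, both rounded), the two functions return the same earth-relative position at every time. Plotting `tychonian_position` over several years traces the looping Spirograph curve.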

While there are many phenomena that would need explaining to make such a system

work, like parallax, Foucault's Pendulum, Coriolis Forces, Tides,

differential rotations in the earth's core, and so on, in principle such things

can be engineered. Certainly the purely geometric aspect of the

physics can be translated to a geocentric coordinate system. And

gravity, and all its side effects, are really purely geometric physics.

The gravitational laws relate the position and mass of one object to another,

but they do not give us a mechanism.

Further, Newton's discovery of gravity under the apple tree and relating it to a

force analogy was not what made him famous. No, what made him famous

was his ability to use mathematics and come up with a curve fitting law that

explained and predicted the motions of heavenly bodies based on their masses,

distances, and velocities. But this does not tell us the final form

of his law, nor does it show a mechanistic reason why it works. And

in general all 'forces' that can be described as 'fields' are in reality

geometric concepts, and have nothing to do with the contact forces by which

objects get pushed or pulled around on earth. This the

Astrophysicists and Cosmologists know. Here is a quotation from

George Ellis:

| '"People need to be aware that there is a range of

models that could explain the observations," Ellis argues. "For

instance, I can construct you a spherically symmetrical universe with

Earth at its center, and you cannot disprove it based on observations."

Ellis has published a paper on this. "You can only exclude it on

philosophical grounds. In my view there is absolutely nothing

wrong in that. What I want to bring into the open is the fact that

we are using philosophical criteria in choosing our models. A lot

of cosmology tries to hide that."' (Scientific American 273 (4): 28-29,

1995). |

How can George Ellis say this? He says this because what we know of

the 'law of gravity' is a geometric relation between two or more objects in

space, reading their masses, computing the distances, and then governing their

motions. In mathematical form, it is simply geometry.
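For reference, the Newtonian form of the law can be written out. This is the standard textbook expression, not something derived in this essay, and the symbols are the usual ones: $m_1, m_2$ for the masses, $\mathbf{r}_1, \mathbf{r}_2$ for the positions, and $G$ for the gravitational constant.

$$
\mathbf{F}_{1} = -\,G\,\frac{m_1 m_2}{\lvert \mathbf{r}_1 - \mathbf{r}_2 \rvert^{2}}\;\hat{\mathbf{r}}_{12},
\qquad
\hat{\mathbf{r}}_{12} = \frac{\mathbf{r}_1 - \mathbf{r}_2}{\lvert \mathbf{r}_1 - \mathbf{r}_2 \rvert}
$$

Here $\mathbf{F}_{1}$ is the force on the first body. The positions enter only through the difference $\mathbf{r}_1 - \mathbf{r}_2$, so the expression is unchanged if the origin of the coordinate system is shifted to any other point.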

The talk you hear about gravity 'bending space' or creating 'space warps', i.e.

that gravity curves space, rests on geometrical concepts based on mathematical ideas.

Space does not really warp. What passes for curvature in space is just

a geometrical concept being imposed on the objects in that space.

The implication that 'space' actually curves to cause the effect described by

the mathematics is not real. I mean it is not real in the

sense that there are no observations of such a thing happening. We

can treat space as if it is full of points and manipulate it mathematically,

twisting, bending, stretching, pulling, crunching. But this does not

make the points real. To treat it as real in some sense is just a

simplistic way of trying to ascribe the motion to the innate properties of the

space.

A theory of gravity is therefore a geometric perspective on the cosmos.

One can make any assumption here, provided the geometry is correct.

The probability of the assumption being correct lies in the domain of philosophy

and metaphysical beliefs. I take mine from divine revelation in the

bible. If one could truly step outside the universe and see it from

the Creator's point of view, then the matter would be resolved instantly.

Meanwhile, we must take his word for it.

Let us stipulate that the average mass particle in the cosmos is rotating

about the earth with a sidereal period of 23

hours and 56 minutes. (2) The basic assumption is reasoned from a theological

or philosophical point of view and the hints in divine revelation about the true

nature of the earth and the cosmos. I will not give the basis for this at this

point since most people are only curious about how such a proposition might be

scientifically justified. Until now, I suspected the earth could be at

the center of the cosmos without understanding exactly how, but I never pursued

it since I could not pin down what went wrong in Physics since Copernicus.

Now with a better understanding of the results of General Relativity, the

question of the center of the Cosmos is reopened. The theory

says that there is no absolute center and that therefore one may choose any

point as the center point without violating the laws of physics. The

idea of no center, and a limitless, but unbounded cosmos are geometric

assumptions a priori. This is called the "Cosmological

Principle". Einstein built them into his theory. He did so and

risked the loss of information. Further, he assumed that every point

is like every other point, i.e. that the universe is homogeneous, and that the

universe is the same in every direction (isotropic) - in an average sense,

forever. It is possible to construct such a geometry.

That Einstein's geometry went so far is merely proof of its geometric nature and

the assumption that the physical phenomena that go with it are covariant

(locally unchanging from point to point). In actual practice, one

has to choose a frame of reference to work in, but given the assumptions of

General Relativity, and its success as a geometric theory, we are free to assume

our own set of coordinates based on biblical ideas.
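The sidereal period of 23 hours and 56 minutes stipulated at the start of this passage can be checked with a few lines of arithmetic. The sketch assumes a 24 hour mean solar day and a year of 365.2422 solar days. The relation used is the usual one: over a year the stars complete one more circuit than the sun does. The function names are illustrative.

```python
def sidereal_day_hours(solar_day_hours=24.0, days_per_year=365.2422):
    """Sidereal period from the solar day: one extra circuit of the stars per year."""
    return solar_day_hours * days_per_year / (days_per_year + 1.0)


def to_hms(hours):
    """Convert decimal hours to whole hours, minutes, and seconds."""
    total_seconds = round(hours * 3600)
    h, rem = divmod(total_seconds, 3600)
    m, s = divmod(rem, 60)
    return h, m, s


period = to_hms(sidereal_day_hours())   # (23, 56, 4)
```

The result is 23 hours, 56 minutes, and about 4 seconds. The arithmetic is the same whether the rotation is assigned to the earth or to the cosmos.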

The GR equations can be solved for the special

case of earth at the point of origin.

All I needed was that at least one solution was available. As for GR itself, which says it is possible to solve the equations this way, I

do not accept its axioms about the Equivalence Principle nor that the speed of

light is the information speed limit. Those assumptions required Einstein to define

time in terms of a local physical clock, and space such that the distance

traveled by light through hyperspace (one of those mathematical concepts in

geometry that exist only in math) divided by the physical clock time

integrated along the path yielded a constant. I deeply suspect that

Einstein's definitions were motivated by wanting to exclude the biblical point

of view on the cosmos a priori by setting up incompatible definitions.

I do not necessarily blame this on the man himself, but on the consensus

philosophy which seeks to avoid the biblical truth. If the speed of

light is constant, then this creates an apparent starlight problem for Genesis

1. This is despite the fact that the problem of starlight has been

solved in the GR framework by reintroducing an absolute clock while letting

local clocks vary such that local time makes the speed of light a constant.

GR has thus disproved itself in the mathematical sense. If the speed

of light is not a constant in absolute time, then what need was there to define

it as a constant in local time?

If this principle is for the sake of being able to say to the general public

that the speed of light is a constant, such that it takes billions of years for

starlight to reach earth, while admitting in the literature that reintroduction

of absolute time, cosmic time, or earth time, may be made without violating the laws of physics, then clearly

cosmologists are trying to bury something they don't want their adoring public

to know. When the GR community is faced with this charge of simple

deception with a clear motive they tend not to believe it because the world has

invested so many resources in it. If something was wrong, they say, we'd

have found the truth long ago and gotten another theory. Yet,

arxiv.org is full of papers proving that something is wrong. Newton's

laws predicted that light would bend when it went around the sun due to gravity.

Well it was found that light does bend when it goes around the sun, only it was

bent twice as much as Newton's laws predicted. The obvious

explanation of this is that the light also slowed down when coming into the

sun's denser gravity field like light slows when going through glass and

refracts. This fact is proved by experimental data on the time

delays of space probe signals passing by the sun. If the light slows

down, then gravity also has longer to affect it, and thus the bending is

greater.
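The factor of two can be checked with a quick calculation. The sketch below assumes the standard textbook expressions for a ray grazing the sun, 2GM/(c^2 R) for the Newtonian corpuscle calculation and 4GM/(c^2 R) for the observed value. The constants are rounded reference values and the function name `deflection_arcsec` is illustrative, not something taken from the discussion above.

```python
import math

# Rounded reference values (assumed for illustration).
G_M_SUN = 1.32712440018e20   # gravitational parameter of the sun, m^3/s^2
C = 299_792_458.0            # speed of light, m/s
R_SUN = 6.957e8              # solar radius, m (ray grazing the limb)


def deflection_arcsec(factor: float) -> float:
    """Deflection angle factor * GM / (c^2 R), converted to arcseconds."""
    angle_rad = factor * G_M_SUN / (C ** 2 * R_SUN)
    return math.degrees(angle_rad) * 3600.0


newtonian = deflection_arcsec(2.0)  # Newtonian corpuscle prediction
observed = deflection_arcsec(4.0)   # value matching the eclipse measurements

if __name__ == "__main__":
    print(f"Newtonian: {newtonian:.3f} arcsec")
    print(f"Observed:  {observed:.3f} arcsec")
```

The two numbers differ by exactly the factor of two discussed above. The calculation says nothing about which explanation of that factor is the right one.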

But wait a minute. If light at constant speed bends, then we call

that acceleration. Anything at constant speed that follows a curve is

accelerating. That is, anything in absolute Euclidean space that

follows a curve in that space at constant speed is accelerating.

The total distance along the arc around the sun divided by the time refused to

make the speed of light a constant. If it was constant coming in,

then the curving would require it to accelerate. Einstein's solution?

Make the path length straight, taking it through hyperspace, and slow the clock

rate down while one waits for the light to complete the trip through hyperspace.

To get rid of the acceleration required for the curve in real space, Einstein

had to take the ray through imaginary hyperspace to get a "straight line", but

the line needed twice too much straightening out to eliminate the acceleration.

In any case the concept of a geometric straight line in hyperspace is merely

that its algebra satisfies the Pythagorean Theorem in the required number of

co-ordinates. Beyond 3 dimensions, the idea of straightness has no

explanatory validity in real life.

We will reject GR (General Relativity) notions of

curved space and relative time, which

are not necessary to arrive at a geocentric conclusion, but only necessary to

maintain the unproved Cosmological Principle. The first result of

rejecting Einstein's fundamental assumption is that either the earth

is unmoving at the center of the cosmos or it is not. The truth cannot be a

matter of perspective only or choice of a frame of reference, or just a choice

of coordinate system. Einstein's

Equivalence Principle is axiomatically flawed at the cosmological level since

the preferred point of view is that of the Creator. We will now take

up the idea of inertia.

The definition of 'inertia' has been hotly debated. Do objects naturally go in

a straight line when there is no outside force acting on them? Just because an

object appears to go in a straight line when it is not being clearly pushed

around does not mean it is going in a perfectly straight line. There is no

empirical evidence for such a law of inertia. We should not make the mistake

of defining the 'line' with the physics of inertia. Absolutely straight lines

exist with the Euclidean metric, but the law of default motion need not mean an

object must go in a Euclidean line. An object will move as God so decrees

that it moves. Such decrees are defined mathematically so that God does not

have to keep changing his decree from moment to moment. This mathematics

equates to pure geometry. So the default motion of an object without an

outside force acting on it is exactly the path, either straight, or of some

curvature that God has ordained for it to follow sans an outside force.

We need not modify the concept of the absolutely straight Euclidean line to the

observed curvature. The concept of 'curved' is a deviation from the Euclidean

line, and can be measured.

In fact, curvature can be defined in a pure Euclidean geometry. And all curved

non-Euclidean geometries are convertible to the Euclidean geometry.

Here is the proof. To measure something in one place, and then

measure it in another place at another time, one must have a rigid unit of

measurement. One must have some understanding of the causes that may cause

a discrepancy in repeated measurement of the same object from time to time and

place to place, such as temperature, humidity, acceleration, or velocity.

If one does not have a rigid means of measurement with an understanding of the

causes that can affect it, then measuring something from place to place and time

to time is meaningless. One's ruler does not have to be perfectly rigid,

but one has to be able to compensate for the lack of perfection through known

causes. Euclidean geometry needs a new axiom: Axiom 5: A

rigid standard of length exists. This is perfectly true in

theory, and approximately true in reality. Here is the key.

All the non Euclidean geometries depend on the truth of this axiom to work.

Why is that? Well, in order to deny Euclid's 5th postulate about parallel

lines, they must start with a rigid unit of length, and then give a function

about how that length changes from a point of reference within the geometry!

For instance, if the function says that the ruler must get shorter as you move

away from the reference point, which let us say is a point next to a line, then

eventually the lines will meet at the limit of infinity. Thus, there

are no parallel lines in that geometry. But if you give a function whereby

the ruler assumes multiple larger values all regarded as the 'same length' as

the original measurement between the point and the line, as you move away from

the reference point, then you can draw an infinite number of parallel lines

through one point all at different angles. As one moves away from the

point of reference the multi-valued increasing ruler guarantees that none of

these non-Euclidean 'parallel lines' will meet. The non-Euclidean

geometry only seems to work because it changes the rigid unit of measurement

from point to point. It may be self consistent in the mathematical

sense, but it is not linguistically consistent. It dynamically

redefines the notion of a straight line, and the notion of fixed length.

Yet, it uses those ideas as the basis for its varying unit of measure or metric.

Because of this, any non-Euclidean geometry can be reverse engineered into a

mathematically equivalent Euclidean geometry. The actual

non-Euclidean geometry has a problem with language. It is deceptive.

It leads to inaccurate visualization of the case, or inability to correctly

visualize the case. This is due to its acceptance of a shifting

standard of measure, and even though this shifting standard has its shiftiness

defined by a mathematical expression that uses the original absolute (or rigid)

length as an input, it is still deceptive, and allows physics to cover up a lot

of stuff. The proof of its invalidity is in its dependence on the

axiom that a rigid unit of measure exists. For without that axiom

the change function could not be built, and without that the non-Euclidean

geometry would not seem to work. This disproves the original

assumption of non-Euclidean geometry, which does contradict Euclidean geometry,

that no, or more than one parallel line may be drawn via a point beside a line.

Non-Euclidean geometry starts with a notion of fixed length. Here is the

distance function from the Poincaré disk model (a non-Euclidean geometry).

The distance function begins with two vectors.

These are two vectors defined in Euclidean space, where the point of reference

is the origin. That is, the tails of the vectors start at the zero vector

in Euclidean space. The lengths given are the normal Euclidean length.

All the inputs on the right side of the equations are Euclidean. The

output on the left side of the second equation gives the non-Euclidean distance

defined in terms of the Euclidean vectors on the right side.

This was generated using the disk model's distance function. You can see

that the ruler shrinks as it approaches the boundary of the circle, so that

similar structures created at different distances from the origin shrink as the

ruler shrinks. The point is that the geometry can be converted back

to the Euclidean.
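For readers who want to experiment, here is a minimal sketch of the disk model's distance function in its usual textbook form, d(u, v) = arcosh(1 + 2|u - v|^2 / ((1 - |u|^2)(1 - |v|^2))), which is assumed to be the form meant above. The function name `poincare_distance` and the sample points are illustrative. Every input is an ordinary Euclidean quantity.

```python
import math


def poincare_distance(u, v):
    """Disk-model distance between two points inside the unit circle.

    u and v are (x, y) pairs measured with ordinary Euclidean coordinates
    from the origin. Both must have Euclidean length below 1.
    """
    norm_u_sq = u[0] ** 2 + u[1] ** 2
    norm_v_sq = v[0] ** 2 + v[1] ** 2
    diff_sq = (u[0] - v[0]) ** 2 + (u[1] - v[1]) ** 2
    delta = 2.0 * diff_sq / ((1.0 - norm_u_sq) * (1.0 - norm_v_sq))
    return math.acosh(1.0 + delta)


# The same Euclidean step of 0.1, taken at the origin and near the boundary.
step_at_center = poincare_distance((0.0, 0.0), (0.1, 0.0))
step_near_edge = poincare_distance((0.8, 0.0), (0.9, 0.0))

if __name__ == "__main__":
    print(f"step at center:    {step_at_center:.4f}")
    print(f"step near the edge: {step_near_edge:.4f}")
```

The step near the edge comes out several times longer in disk-model units than the identical Euclidean step at the center, which is the shrinking ruler described above.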

Let us get back to the definition of inertia. Inertia is the path in a given place

that an object will move when no force is acting upon it. Is

inertia a property innate to just one object, or is it the result of a

relationship between many objects? In a one object universe, inertia

has no meaning. Uniform motion cannot be detected in a one

object universe. For this reason, coordinate systems related by a

difference of uniform motion (constant velocity difference) are physically

equivalent. Accelerated motion is a different matter.

Here we must define two types of 'acceleration'. One I will

call 'non-differential acceleration', and the other 'differential acceleration'.

Differential refers to slightly different accelerations in a connected mass

causing internal squashing or stretching of the object. This is because

the mass particles are trying to move at different rates of acceleration due to

their different distances from the second mass in Newton's equation.

Differential acceleration is normal at local scales and can be detected by

tensions in the masses. Non-differential acceleration occurs when

all the mass particles in an object accelerate at the same rate. In

this case, there are no internal tensions by which it can be detected.

This type of acceleration occurs when the whole inertial frame of reference is

accelerating. It cannot be detected within the frame of reference.
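A rough numerical illustration of the two types, assuming the ordinary inverse-square expression for the acceleration toward a second mass. The example (a 100 m object at ten million kilometers from a mass equal to the sun's) and all names are hypothetical, chosen only to show the size of the differential part.

```python
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
SECOND_MASS = 1.989e30  # the attracting mass, kg (about one solar mass)
DISTANCE = 1.0e10       # distance from the second mass to the object's center, m
HALF_LENGTH = 50.0      # half the length of the object, m


def accel_toward(mass: float, distance: float) -> float:
    """Inverse-square acceleration toward `mass` at the given distance."""
    return G * mass / distance ** 2


# Acceleration shared by the whole object (the non-differential part).
common = accel_toward(SECOND_MASS, DISTANCE)

# Extra acceleration of the near and far ends relative to the center
# (the differential part, which shows up as internal tension).
near_excess = accel_toward(SECOND_MASS, DISTANCE - HALF_LENGTH) - common
far_excess = accel_toward(SECOND_MASS, DISTANCE + HALF_LENGTH) - common

# First-order estimate of the differential part: 2 G M d / r^3.
estimate = 2.0 * G * SECOND_MASS * HALF_LENGTH / DISTANCE ** 3

if __name__ == "__main__":
    print(f"common:      {common:.4f} m/s^2")
    print(f"near excess: {near_excess:.3e} m/s^2")
    print(f"far excess:  {far_excess:.3e} m/s^2")
```

The near end is pulled slightly harder than the center and the far end slightly less, so the object is stretched. The common part is about a hundred million times larger but produces no tension at all.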

The concept of acceleration should

not be confused with 'force'. One object can push against another and

accelerate the other object contrary to its natural acceleration. This is what

we call force. However, the natural acceleration is just the way the laws of

motion in free space, (empty of contacting objects to cause a force), dictate

the object must move. This acceleration is independent of any force. It is

merely the default motion of the object with respect to time.

Only in differential acceleration do actual forces arise internally in the

object accelerating. But this is because different mass particles in

the object are trying to accelerate at different rates, and not because a

'force' is being exerted to cause the acceleration. In other words,

acceleration itself is purely a geometric relation, and any force arising from

it depends on differential acceleration in an object.

The inertial law is just that motion which an object will move in without any

contacting forces or objects hitting it. Contacting 'forces' may include

magnetic fields or electrostatic repulsions, or internal differential movements

that exert a force. One must further postulate that

the contacting force must be caught in the act of imposing itself, in order

not to be confused with the natural inertial law for the

object. Further, the fundamental nature of such motion that results from the

inertial default law for the object, and the motion that results from geodesic

accelerations imposed by the presence of other objects is indistinguishable in

the case of non-differential acceleration.

The other masses, therefore, modify the local law of default inertia, by

mathematical rules operating on the motion path of the object and the values of

the surrounding masses. To attribute the resultant path to 'forces' is a pure

assumption. Therefore, we must account for a non-differential acceleration

component and a differential component in every acceleration.

Non-differential acceleration is sometimes called 'frame-dragging' or

'gravitomagnetism'.

Here is an analogy. Consider that an irreducible mass

particle in free space is really a quantum sized spaceship. Consider also that

this ship is on automatic pilot and that the course of it is determined by the

onboard computer and the ship's sensors. It has limitless energy at its

disposal, and no exhaust. It just moves as the auto-pilot dictates. The

computer takes the sensor readings of local masses and computes the path in

space to move based on the size and motion of the other masses. The ship then

moves without any force from the other masses making it move! What the computer

did was solve the "N-body" problem for its own motion, and then moved per the

solution. Here is the general formula. It is only correct if none

of the masses in the n-body system is the earth.

Equation 1:

$$m_j \mathbf{a}_j = G \sum_{k=1}^{N} \frac{m_j m_k (\mathbf{q}_k - \mathbf{q}_j)}{\left|\mathbf{q}_k - \mathbf{q}_j\right|^3}$$

Where $G$ is the gravitational constant. Let $m_j$ stand for just

one particular mass particle. The general equation reduces to

equation 2 after dividing out $m_j$.

Equation 2:

$$\mathbf{a}_j = G \sum_{k=1}^{N} \frac{m_k (\mathbf{q}_k - \mathbf{q}_j)}{\left|\mathbf{q}_k - \mathbf{q}_j\right|^3}$$

The acceleration vector for mass particle $m_j$ is $\mathbf{a}_j$.

$N$ is the total number of other particles acting on $m_j$.

$m_k$ holds place for the masses of the other particles. $\mathbf{q}_k$ holds

place for the vector positions of all the other particles.

$\mathbf{q}_j$ is the position vector of mass particle $m_j$. The reason for the

third power in the denominator is to divide the $\mathbf{q}_k - \mathbf{q}_j$

by its own length $\left|\mathbf{q}_k - \mathbf{q}_j\right|$. This turns it into a unit

vector imparting only the directional component to the summation. It

leaves the second power of the length $\left|\mathbf{q}_k - \mathbf{q}_j\right|$, which is the distance between

each mass and $m_j$. Thus the formula is Newton's Law: $a = G m / r^2$. Our

quantum mass ship's auto-pilot accelerates at $\mathbf{a}_j$. With

each quantum transition of time, the ship re-computes and alters its

trajectory. This is all done without any forces. It does require information

and computation. It ascribes computational intelligence to the structure of

the universe. All the mass particles in the universe are blindly computing

automata pre-designed by God to behave just so. There is no need for 'forces'

to explain their behavior. They move according to laws, not according to

deterministic forces.
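The auto-pilot computation described above can be sketched in a few lines. This is only an illustration under stated assumptions: it uses the ordinary Newtonian sum over the other masses, a simple fixed time step standing in for the "quantum transition of time", and hypothetical names (`acceleration`, `step`). Following the caveat above, none of the masses in the example is the earth.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def acceleration(j, masses, positions):
    """Acceleration of particle j computed from the other masses.

    Each term is G * m_k * (q_k - q_j) / |q_k - q_j|^3. The mass of
    particle j itself never enters the result.
    """
    qj = positions[j]
    ax = ay = az = 0.0
    for k, (mk, qk) in enumerate(zip(masses, positions)):
        if k == j:
            continue
        dx, dy, dz = qk[0] - qj[0], qk[1] - qj[1], qk[2] - qj[2]
        dist = math.sqrt(dx * dx + dy * dy + dz * dz)
        scale = G * mk / dist ** 3
        ax += scale * dx
        ay += scale * dy
        az += scale * dz
    return (ax, ay, az)


def step(masses, positions, velocities, dt):
    """One re-computation: update every velocity, then every position."""
    accs = [acceleration(j, masses, positions) for j in range(len(masses))]
    new_v = [tuple(v[i] + a[i] * dt for i in range(3))
             for v, a in zip(velocities, accs)]
    new_p = [tuple(p[i] + v[i] * dt for i in range(3))
             for p, v in zip(positions, new_v)]
    return new_p, new_v


if __name__ == "__main__":
    # A solar-mass body and a 1 kg particle about 1.496e11 m away.
    masses = [1.989e30, 1.0]
    positions = [(0.0, 0.0, 0.0), (1.496e11, 0.0, 0.0)]
    print(acceleration(1, masses, positions))
```

Each call to `step` needs only the positions and masses of the other particles as input, which is the information the ship's sensors are imagined to collect.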

If I may branch into theology here for a minute, I would describe the attempt of

scientists who try to find some kind of 'fundamental force' for everything as a

kind of idolatry. The emphasis is on 'try'. It is as if they want a

simpler explanation for every complex observation.

A certain interpretation of

Ockham's Razor is followed that says that simplicity is probably always the

right explanation. While this is a good rule when one actually has more than

one explanation of equal probability to choose between, it is no good when one has no explanation

whatsoever. With no knowledge of how something works, it may just as well be

complex as simple in nature.

The following point about Ockham's razor needs to be understood:

| Ockham's razor is not equivalent to the idea that "perfection is simplicity".

Albert Einstein

probably had this in mind when he wrote in 1933 that "The supreme goal

of all theory is to make the irreducible basic elements as simple and as

few as possible without having to surrender the adequate representation

of a single datum of experience" often paraphrased as "Theories should

be as simple as possible,

but no simpler."

Or even put more simply

"make it simple, not simpler".

It often happens that the best explanation is much more complicated

than the simplest possible explanation because its postulations amount

to less of an improbability. Thus the popular rephrasing of the

razor - that "the simplest explanation is the best one" - fails to

capture the gist of the reason behind it, in that it conflates a

rigorous notion of simplicity and ease of human comprehension. The two

are obviously correlated, but hardly equivalent.

Source. |

Let me restate that last point. A more complicated theory that is

based on explanations more probable is more likely true than a simpler theory

based on explanations less probable. For example, Newton's law of

gravity fails to accurately predict the orbits of stars around their galactic

centers. This problem has been known since 1959.

Source.

Popular evolutionary cosmologies proposed 'dark matter' and 'dark energy' to

overcome this problem. They propose an unseen, and undetected

presence of a 'halo' of dark matter around the outside of galaxies that is

totally transparent to electromagnetic radiation. Astrophysicists

have found no trace of this hypothetical dark matter, yet they need it to pull

the galactic arms around the galaxy. Without the dark matter, the

galactic arms would quickly randomize and dissipate. The pretty spiral

structures we see should not be there according to Newton's Law. Yet

they are.

Which is more likely? Is the basic Newtonian formula for gravity

incorrect on cosmic scales, or is the formula correct? If it is

correct, then we must propose 'dark matter'. If it is incorrect,

then we need no dark matter. We must therefore weigh the probabilities.

Let the probability of dark matter be $P_{dm}$. And let the

probability of a modified gravitational law be $P_{mg}$. Then

we must ask which is more likely: $P_{dm} > P_{mg}$ or $P_{mg} > P_{dm}$.

Since no dark matter has ever been seen, its probability is low. There

are historical precedents for this. Once it was thought that there

was a planet named Vulcan between the Sun and Mercury. This was

assumed to explain an anomalous 43 arcsecond precession of Mercury's perihelion

per century. Einstein was able to explain it by using the new theory

of General Relativity to modify Newton's gravitational law. After

this, there was no need for the dark planet that no one could see.

What then is the probability that the law of gravity is different at cosmic

scales? Well, the law of gravity has been tested locally, and near

the sun, but we cannot test it at cosmic scales. So we have little

empirical data of cosmological gravity. The idea that it could be

different has nothing against it, except perhaps a philosophical commitment to

the idea of naturalistic "simplicity". However, the probability

against 'dark matter' only increases with each failure to empirically observe

it. There is no growing list of negative observations for a modified

gravity law at a cosmological scale. Since this is the case, the

probability of more complexity in the gravity law is greater than that for dark

matter: $P_{mg} > P_{dm}$.
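The 43 arcsecond figure in the Vulcan precedent can be reproduced from the standard General Relativity expression for the perihelion advance per orbit, 6 pi GM / (c^2 a (1 - e^2)). The sketch below assumes rounded textbook values for Mercury's orbit, and the function name is illustrative.

```python
import math

G_M_SUN = 1.32712440018e20  # gravitational parameter of the sun, m^3/s^2
C = 299_792_458.0           # speed of light, m/s

# Rounded orbital elements for Mercury (assumed values).
SEMI_MAJOR_AXIS = 5.7909e10  # m
ECCENTRICITY = 0.2056
PERIOD_DAYS = 87.969
DAYS_PER_CENTURY = 36525.0


def perihelion_advance_arcsec_per_century() -> float:
    """Relativistic perihelion advance accumulated over one century."""
    per_orbit_rad = (6.0 * math.pi * G_M_SUN
                     / (C ** 2 * SEMI_MAJOR_AXIS * (1.0 - ECCENTRICITY ** 2)))
    orbits = DAYS_PER_CENTURY / PERIOD_DAYS
    return math.degrees(per_orbit_rad * orbits) * 3600.0


advance = perihelion_advance_arcsec_per_century()

if __name__ == "__main__":
    print(f"{advance:.1f} arcsec per century")
```

With these inputs the result lands within about half an arcsecond of the 43 arcsecond anomaly, with no unseen planet in the calculation.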

What is probable depends a lot on what one's observations are, or what

observations one is willing to accept. However, we must not feel compelled

to accept a theory just because it is simple. It may be that even

though simple, and entertaining fewer assumptions in a quantitative sense, that

the few assumptions it does make are qualitatively poor. In other

words, the improbability of a few assumptions is greater than the improbability

of the more complex alternative. We must also keep in mind that reasoning

from divine revelation about the universe influences what we think is probable

or not. If one makes the mistake of using naturalism as a starting point,

then there is a bias against complexity. Complexity is what we

expect with a designer. Simplicity is what we expect otherwise.

Thus one's philosophy and beliefs do affect what one is willing to consider

probable. Our second axiom is that the Creator

incorporated pleasing aesthetics into his creation. This axiom supports

the modified gravitational law idea and not the dark matter idea.

Dark matter is un-aesthetic. It is an uneconomical brute-force solution, and

there is nothing in this idea that explains the preservation of galactic

structure. The aesthetic goal, of course, is to maintain the

pleasing spiral structure of the arms as the galaxy grows. While the

presence of dark matter in all the exact right locations has some explanatory

power, it does not explain how the dark matter came to be located in such a

convenient unseen position, and also creates additional improbability of why

only the pleasing pieces of matter light up and are seen, while the dark pieces

remain hidden. On the other hand, modified gravity makes sense

from both an engineering point of view and an aesthetic one without needless

multiplication of assumptions. Not only is dark matter needed, but

dark energy has been proposed also. That's not parsimonious.

In fact, science has found no fundamental 'force' of gravity. There is no force

observed. Objects just move in relation to other objects following

mathematical rules! They may say that an effect implies a force, but this is no

empirical explanation. It is merely a hypothesis that has not been verified. The

effect may in fact be a result of the computational analogy I just proposed.

Without the real explanation, the only difference between my analogy and the

standard explanation using 'force' is that I assume more complexity in the

answer. My answer is closed to the agnostic and atheist who believes in chance

and blind force because of his or her philosophical exclusion of the complex and

intelligent answer. The real nature of the universe may be that God loaded

infinite computational ability into its constituent parts.

Since no force is observed, one cannot really invoke parsimony to explain it.

If a force was clearly evidenced propagating at a distance, and only complex

hypotheses were offered against it, then Ockham's Razor would apply. Without

such empirical evidence, the account offered for gravity need not assume the explanation proposed

by naturalistic philosophy, which all too often is equated with science.

General Relativity wants to keep all laws of the universe invariant everywhere.

Why is this? Could it be that varying laws might imply the need of

intelligence to govern them and the way they work together? Let us take the

basic law of gravitation: $a_1 = G m_2 / r^2$. The

acceleration of a mass $m_1$ toward a second mass $m_2$ depends

on a constant divided by the distance between them squared. So we see that the

result of the law varies with the distance between the masses. Do you notice

something funny about this equation? The acceleration of the first mass does not

depend at all on how much mass it has itself, but varies according to the mass

of the other object. Even though the law may be written the same way every

time, it gives varying results. To say that invariance is a rule then is to say

that the mathematical form of the law must remain invariant. But if the law is

disconnected from the results, which are variant, then this implies a law giver

separate from the physics of the universe! (On the principle that a law

can only be separated from physical phenomena on the assumption of a Creator.) Further, it is also a self-refuting

principle, in that case, because a law giver can prescribe restricted domains

over which his law will work, and then change its form for other domains!

So if there is no creator, then the law cannot be separated from the physical

phenomena, which are variant. But if there is a Creator, then it

can be separated from the physical phenomena, and thus can be made to vary in

principle by the Creator. The law is nothing other than the word of the

Creator, which is the wisdom that was with him always.
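The point that the first mass drops out can be seen in one step from the conventional two-mass presentation of Newton's law. The symbols $F$, $m_1$, $m_2$ and $r$ are assumed here purely for illustration:

$$F = \frac{G\, m_1 m_2}{r^2}, \qquad a_1 = \frac{F}{m_1} = \frac{G\, m_2}{r^2}.$$

The first mass cancels, so the result depends only on the other mass and the distance, while the written form of the law stays the same for every pair of masses.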

So now, on the assumption that the Creator placed earth at the center of his

creation, we propose a law of gravity that does not conflict with it.

We let the center of our coordinate system be the point of origin.

The law is then constructed so that the point of origin never has any

acceleration, or that if points connected to it do, they are cancelled out by

equal and opposite accelerations. For a mass particle in the earth, the acceleration is:

[equation missing]

This is with respect to N bodies in relation to our mass particle that are more

distant from the point of origin than our mass particle. This

condition is stated under the summation sign. The formula is half of

the relation between two mass particles. Here is the formula for the

acceleration of the more distant mass particle:

[equation missing]

Now observe the two scaling terms in these formulas. These scaling terms

take the total acceleration between two particles and divide it up in a linear

fashion. The particle closer to the origin ends up with only a small part

of the total and the particle farther away ends up with the greater part of the

total. When [condition missing], the first

term approaches 1 and the second 0. In both cases the formulas reduce to:

[equation missing]

The scaling term represents the x

component distance of the short vector from the origin divided by the length of

the long vector: [equation missing].

This allows us to divide the acceleration so that it diminishes to zero as one

approaches the point of origin for the near particle. The greater part of

the acceleration is committed to the far particles.

One might be tempted to think that all of the acceleration put on a particle in

the earth should be diverted to the attracting mass outside the earth, but this

is not needed with this formula. Some of it is kept back to create

the forces that cause tides. All these forces cancel out

because they are symmetric about the origin. The scaling term takes on a

negative value for particles opposite the origin from the 'attracting' particle.

In the usual Newtonian formula, the acceleration of one mass is determined by

the other mass divided by the distance squared:  .

But here for the far particle, the acceleration that would be due to the

particle closer to the origin in Newton's formula is now taken and diverted to

the far particle:  .

A linearly determined amount is left with the particle near the origin.

The last term,  , merely points the acceleration in the right direction with a

unit vector.
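For comparison, the usual Newtonian rule described above can be sketched in a few lines of Python. This shows only the standard formula, not the modified law. The function name, the value of G, and the example masses are assumptions made for the illustration.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2 (assumed value)

def newtonian_acceleration(pos, other_pos, other_mass):
    """Acceleration (m/s^2) of a body at pos due to other_mass at other_pos."""
    # Vector from the body to the attracting mass.
    delta = [o - p for p, o in zip(pos, other_pos)]
    dist = math.sqrt(sum(c * c for c in delta))
    # Magnitude: G times the other mass divided by the distance squared.
    magnitude = G * other_mass / dist ** 2
    # The unit vector delta/dist merely points the acceleration the right way.
    return [magnitude * c / dist for c in delta]

# Hypothetical example: a 1000 kg mass located 5 m away at (3, 4, 0).
a = newtonian_acceleration((0.0, 0.0, 0.0), (3.0, 4.0, 0.0), 1000.0)
```

The result points from the body toward the attracting mass, and its length depends only on the other mass and the separation.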

What happens physically is this. The sun goes around the earth,

accelerating toward the earth as much as the earth accelerated toward it in the

Copernican model. The sun has a tangential velocity of 30

km/second, so even though it accelerates toward the earth, the tangential

velocity keeps it at a constant, nearly circular radius. Since the earth is at

the point of origin, at rest, the acceleration based on the sun's mass that is

due to the earth is the small amount of differential acceleration needed to

cause tides. As a particle approaches the vertical plane through the

earth's origin, tangent to the sun, its perpendicular distance to the plane

diminishes to zero, and along with it the small part of the acceleration reduces

to zero. On the far side of the earth, the scaling factor is negative, and

the sun accelerates toward it by more than a factor of 1 times its mass.

This causes a tidal force symmetric about the origin without moving it.
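The numbers in this paragraph can be checked with a rough sketch. It compares the acceleration needed to hold a 30 km/second circular path with the standard gravitational acceleration at the sun's distance, and estimates the small differential acceleration across the earth's radius. The constants are assumed textbook values, not figures taken from this article.

```python
GM_SUN = 1.327e20       # sun's gravitational parameter, m^3/s^2 (assumed)
DISTANCE = 1.496e11     # earth-sun distance, m (assumed)
EARTH_RADIUS = 6.371e6  # m (assumed)
V_TANGENT = 30_000.0    # m/s, the 30 km/second quoted above

# Acceleration from the inverse-square law at the earth-sun distance.
accel_gravity = GM_SUN / DISTANCE ** 2
# Acceleration needed to stay on a circle of that radius at 30 km/s.
accel_circular = V_TANGENT ** 2 / DISTANCE
ratio = accel_circular / accel_gravity

# Differential (tidal) acceleration between the near side and the center.
tidal = GM_SUN / (DISTANCE - EARTH_RADIUS) ** 2 - accel_gravity
```

Under these assumptions the two accelerations agree to within a few percent, and the differential term is several orders of magnitude smaller than either.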

A naturalistic philosopher might tell us that it is ad hoc to modify Newton's

formula. But then if the Creator told us that the earth was a special case

in the physics of the universe, we would be justified in expecting that

the laws can be modified to meet that special case. My

modification here is not the last word on gravity. It is just an exercise

to show what is possible, and to stimulate some thinking.

Because God is the Engineer in Chief, he can tailor-make physical laws for any

special situation he likes. He can even jump into the middle of his

running program we call Creation and modify what he likes. He did so

at the time of the Flood, and also at the time of the Fall. He plans

to intervene again in the end of days. Most of the Cosmologists on

earth then will be shaking their heads and heading for caves.

The Rotating Cosmos

It would seem that a rotating cosmos would be a non-inertial frame of reference

and that we would be able to detect it by the deviation of a mass from a

'straight' line of motion. Don't confuse this with an inertial frame

of reference, which is a frame moving at a constant velocity.

Every point fixed with respect to a rotating cosmos is non-differentially

accelerating in a circle. Yet if we reformulate the law of

inertia so that the inertial rest frame is defined to track the rotation of the

cosmos, then we would not be able to detect the rotation by means of a gyroscope

or by assuming straight line motion. For the inertial motion would

be in a curve.

Further, if the earth were stationary inside such a rotating frame, and the

rotating frame was the inertial frame, such that the default path of masses with

no outside forces acting was to rotate around the earth in 23 hours and 56

minutes, then we would be quite wrong to conclude that the change in orientation

of a gyroscope or say a Foucault pendulum over the period of one cosmic rotation

meant that the earth rotated. This possibility has led General Relativists,

in pursuit of the Equivalence Principle, to propose that the default

inertial frame of the universe is in fact defined by the average motion of all

the mass in the universe. And if the Cosmos is given a rotating motion around

a stationary earth frame, then the rotating cosmos is the motion of the average

mass, and therefore defines the default inertial path. Other explanations have

been suggested, such as 'frame dragging' etc.

Intuitively, to have something move by default in a curve seems more complex

than a line. Yet everything in the universe seems to move in curved paths

from atoms to ships at sea. Do we have to come up with a set of

forces to explain all the motions, or is it enough to be able to describe the

motions in sufficient degree to make them predictable? Further,

intuition might lead one to think the earth is flat, when in fact, the truth is

a curve. We should not assume that the law of inertia is simple.

It may even vary significantly in regions near black holes.

Let  represent the gravitational acceleration of the satellite resulting from

the earth's mass alone, without any adjustments for cosmological rotational

effects on the acceleration. The value of this is  on the earth's surface, but

it varies with distance from the center of the earth (  ) according to:  .

G is the gravitational "constant", and M is the mass of the earth in kg. The

double bars around  mean 'the magnitude of' or length of the vector  , since a

vector expresses both direction and magnitude. In this calculation  points

down to the center of the earth, and  .
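The inverse-square dependence on distance described here can be sketched as follows. The function name `local_gravity` and the numerical values of G, M, and the earth's radius are assumptions chosen for illustration.

```python
G = 6.674e-11           # gravitational "constant", m^3 kg^-1 s^-2 (assumed)
M = 5.972e24            # mass of the earth, kg (assumed)
EARTH_RADIUS = 6.371e6  # mean radius of the earth, m (assumed)

def local_gravity(r):
    """Magnitude of the earth's gravitational acceleration at distance r (m)."""
    return G * M / r ** 2

g_surface = local_gravity(EARTH_RADIUS)
# Doubling the distance from the center cuts the acceleration to a quarter.
g_double = local_gravity(2 * EARTH_RADIUS)
```

With these inputs the surface value comes out close to the familiar 9.8 meters per second squared.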

Let  represent the resultant gravitational acceleration for any given spot

after factoring in the cosmological effect of the rotating universe.

When the coordinate centers of a cosmologically sized rotating gravity field

and a local gravity field (at rest with respect to the rotating gravity field)

coincide, then:

(1)

where  is an acceleration cancellation effect of the rotating cosmological

gravitational field upon the local gravitational acceleration  .

The physical interpretation of this is that  reduces the gravitational

acceleration of the local field for objects at rest with respect to the local

field. Using geometry,  is the frame of reference inverse of centripetal

acceleration. Therefore, the magnitude of the acceleration cancellation vector

equals the angular velocity squared times the radius from the center of the

rotation point:  .

The angular velocity is expressed by:  . The universe makes a complete circle,

2π radians (or 360 degrees), in  seconds, which is the sidereal time for a

complete rotation of the heavens about the earth (23 hours 56 minutes). It is

four minutes less than a solar day since the sun moves with respect to the

heavens also.
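As a numerical sketch of the two relations just stated, the angular velocity is a full circle divided by the sidereal period, and the cancellation magnitude is that angular velocity squared times the radius. The period of 86164.1 seconds is an assumed value for the sidereal day (the text quotes it as 23 hours 56 minutes), and the names `omega` and `cancellation` are hypothetical.

```python
import math

SIDEREAL_DAY = 86164.1        # seconds (assumed; about 23 h 56 min)
EQUATORIAL_RADIUS = 6.378e6   # earth's equatorial radius, m (assumed)

# Angular velocity: one complete circle (2*pi radians) per sidereal day.
omega = 2 * math.pi / SIDEREAL_DAY

def cancellation(r):
    """Magnitude of the acceleration cancellation at radius r (m)."""
    return omega ** 2 * r

a_equator = cancellation(EQUATORIAL_RADIUS)
```

At the equator this amounts to a few hundredths of a meter per second squared, small next to the local gravitational acceleration, and it grows linearly with the radius.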

The value of the product of G and M is actually known more accurately than

either. It is expressed by  . So we will substitute it in  for G and M.

For the geostationary satellite, we will want the formula  . Also the net

accelerating force on the satellite with respect to the earth is  . We now

make substitutions into  for the values of  ,  , and  . We obtain:  . We

solve this equation for  , which is

merely a matter of algebraic manipulation. First multiply both sides by

, which is